Set the Stage: Enabling Storytelling with Multiple Robots through Roleplaying Metaphors

The ACM Symposium on User Interface Software and Technology (UIST 2024)

Abstract

Gestures are an expressive input modality for controlling multiple robots, but their use is often limited by rigid mappings and recognition constraints. To move beyond these limitations, we propose roleplaying metaphors as a scaffold for designing richer interactions. By introducing three roles (Director, Puppeteer, and Wizard), we demonstrate how narrative framing can guide the creation of diverse gesture sets and interaction styles. These roles enable a variety of scenarios, showing how roleplay can unlock new possibilities for multi-robot systems. Our approach emphasizes creativity, expressiveness, and intuitiveness as key elements for future human-robot interaction design.

Robot Design

At the core of each robot is an ESP32 microcontroller, which handles motor control and wireless communication via built-in Wi-Fi and Bluetooth. It drives a pair of 150 RPM N20 DC gear motors through a TB6612FNG motor driver, allowing precise speed and direction control.
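As a rough illustration of how the ESP32 might command the TB6612FNG, the sketch below maps a signed speed to the driver's two direction inputs and a PWM duty, then mixes a linear and an angular command into left and right channels. It is plain Python rather than firmware. The 10-bit duty range, the names `channel_state` and `drive`, and the sign conventions are assumptions, and which input pattern counts as "forward" depends on how the motors are wired.

```python
from __future__ import annotations

from dataclasses import dataclass

PWM_MAX = 1023  # assumed 10-bit duty resolution; not stated in the source


@dataclass(frozen=True)
class ChannelState:
    in1: int   # logic level for the driver's IN1 input
    in2: int   # logic level for the driver's IN2 input
    duty: int  # PWM duty, 0..PWM_MAX


def channel_state(speed: float) -> ChannelState:
    """Map a signed speed in [-1.0, 1.0] to one TB6612FNG channel."""
    speed = max(-1.0, min(1.0, speed))  # clamp out-of-range requests
    duty = round(abs(speed) * PWM_MAX)
    if duty == 0:
        return ChannelState(in1=0, in2=0, duty=0)  # both inputs low: outputs off
    if speed > 0:
        return ChannelState(in1=1, in2=0, duty=duty)  # one rotation direction
    return ChannelState(in1=0, in2=1, duty=duty)      # the opposite direction


def drive(linear: float, angular: float) -> tuple[ChannelState, ChannelState]:
    """Differential-drive mixing; returns (left, right) channel states.

    Assumed convention: positive angular speeds up the right wheel,
    which turns the robot to the left.
    """
    return channel_state(linear - angular), channel_state(linear + angular)
```

For example, `drive(1.0, 0.0)` runs both channels at full duty in the same direction, while `drive(0.0, 1.0)` runs them in opposite directions so the robot spins in place.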

Each motor is paired with a 43 mm rubber wheel, providing smooth traction, while a caster ball wheel at the front adds stability and enables tight turns. The entire system is powered by a 3.7 V LiPo battery, compact enough for mobility but sufficient for extended runtime.
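The wheel diameter and motor rating together give a rough upper bound on straight-line speed. The estimate below assumes the 150 RPM figure is the no-load speed at the wheel and ignores slip and load, so real speeds would be lower; the function name is illustrative.

```python
import math

WHEEL_DIAMETER_M = 0.043  # 43 mm wheel, from the text
MOTOR_RPM = 150           # rated motor speed, from the text


def top_speed_m_per_s(diameter_m: float, rpm: float) -> float:
    """Wheel circumference times revolutions per second."""
    return math.pi * diameter_m * rpm / 60.0


print(f"{top_speed_m_per_s(WHEEL_DIAMETER_M, MOTOR_RPM):.2f} m/s")  # prints "0.34 m/s"
```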

Set the Stage

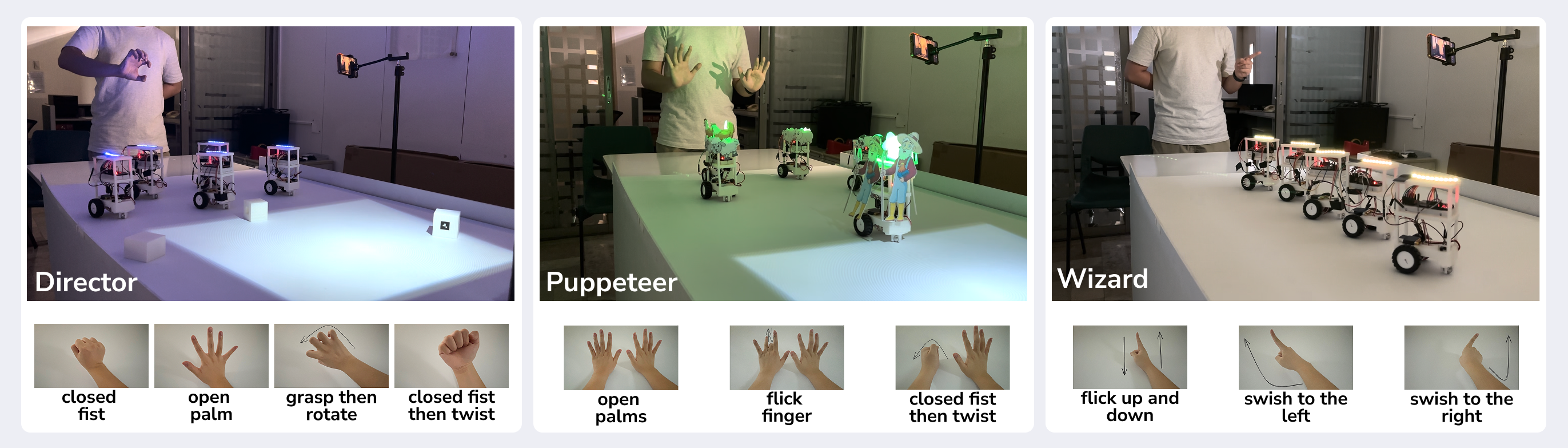

Set the Stage uses three narrative roles (Director, Puppeteer, and Wizard) to organize and repurpose a limited set of gestures into meaningful, context-driven robot behaviors. These metaphors offer users distinct modes of interaction, each framing gesture use in a different way and enabling varied applications from the same foundational gesture set.

Director

As a Director, the user guides a group of robots as if they were an ensemble cast on stage. Much like a theater director using hand signals to cue actors, gestures become high-level commands that coordinate collective movement.

An open palm pushed forward sends the group gliding ahead; a pulled-back fist makes them reverse. A grasp followed by a rotating wrist turns the group left or right. These simple motions allow the Director to navigate the ensemble through a scene, avoiding obstacles, hitting “spotlight” positions, and shaping a clear, story-driven spatial flow.
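One way to picture the Director mapping is a lookup table from recognized gestures to a single motion command that is broadcast to every robot. The gesture labels, the `(linear, angular)` command format, the robot IDs, and the function name below are all hypothetical; the source does not describe the recognizer's output or the command protocol.

```python
from typing import Dict, List, Tuple

Command = Tuple[float, float]  # (linear, angular), each in [-1.0, 1.0]

# Hypothetical gesture labels, as a recognizer might emit them.
DIRECTOR_COMMANDS: Dict[str, Command] = {
    "palm_push": (1.0, 0.0),            # open palm pushed forward: group advances
    "fist_pull": (-1.0, 0.0),           # pulled-back fist: group reverses
    "grasp_rotate_left": (0.0, 1.0),    # grasp + wrist rotation: turn left
    "grasp_rotate_right": (0.0, -1.0),  # grasp + wrist rotation: turn right
}


def direct(gesture: str, robot_ids: List[str]) -> Dict[str, Command]:
    """Broadcast one group-level command to every robot in the ensemble."""
    command = DIRECTOR_COMMANDS.get(gesture, (0.0, 0.0))  # unknown gesture: stop
    return {robot_id: command for robot_id in robot_ids}
```

Because every robot receives the same command, the ensemble moves as one unit, which matches the high-level cueing the Director metaphor describes.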

The metaphor extends beyond navigation. By chaining these same gestures (forward, back, rotate) into timed sequences, the Director can orchestrate synchronized, dance-like performances. With consistent timing and spacing, the robots shift from functional movement to expressive choreography, turning the stage into a dance floor where every motion carries narrative weight.
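Chaining gestures into a timed sequence can be sketched as turning a list of steps into a cue timeline. The step format, the durations, and the function name here are assumptions made for illustration, not the authors' choreography format.

```python
from typing import List, Tuple


def schedule(steps: List[Tuple[str, float]]) -> List[Tuple[float, str]]:
    """Turn (gesture, duration_s) steps into (start_time_s, gesture) cues."""
    timeline = []
    start = 0.0
    for gesture, duration in steps:
        timeline.append((start, gesture))
        start += duration  # the next cue begins when this one ends
    return timeline


# A hypothetical three-step routine with made-up durations.
routine = [("forward", 2.0), ("back", 2.0), ("rotate", 1.0)]
print(schedule(routine))  # prints [(0.0, 'forward'), (2.0, 'back'), (4.0, 'rotate')]
```

Sending each cue to all robots at its start time keeps the group in step, which is what makes the movement read as choreography rather than navigation.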

Puppeteer

Unlike the Director’s broad, sweeping cues, the Puppeteer operates on a more detailed level, controlling individual robots with finger-based gestures. Each robot is mapped to a specific finger, transforming the user’s hand into a kind of living marionette controller. A flick of the index finger sends one robot moving forward, while a twist of the wrist with a clenched fist can trigger a coordinated group movement.

In our implementation, these robots take on character roles from the classic children’s song Old MacDonald Had a Farm, with the Puppeteer guiding them through a sequenced performance. This role highlights how a small, consistent gesture vocabulary can be adapted for precise, character-driven control.
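The finger-to-robot mapping behind the Puppeteer role might look like the sketch below. The five-robot assignment, the robot IDs, the event names, and the action strings are hypothetical; the source states only that each robot is tied to one finger and that a fist twist triggers a group movement.

```python
from typing import Dict, Optional

# Assumed one-robot-per-finger assignment; the IDs are placeholders.
FINGER_TO_ROBOT: Dict[str, str] = {
    "thumb": "robot_0",
    "index": "robot_1",
    "middle": "robot_2",
    "ring": "robot_3",
    "pinky": "robot_4",
}


def puppeteer(event: str, finger: Optional[str] = None) -> Dict[str, str]:
    """Return the action each affected robot should perform for one gesture."""
    if event == "fist_twist":
        # Clenched fist plus wrist twist: every robot joins a group movement.
        return {robot: "group_move" for robot in FINGER_TO_ROBOT.values()}
    if event == "flick" and finger in FINGER_TO_ROBOT:
        # A single finger flick moves only the robot tied to that finger.
        return {FINGER_TO_ROBOT[finger]: "forward"}
    return {}  # unrecognized input: no robot acts
```

With this layout, `puppeteer("flick", "index")` addresses a single robot, while `puppeteer("fist_twist")` addresses all of them.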

But what if the marionette metaphor didn’t stop at just the robots? Since individual fingers already act as direct links to characters, this approach could easily extend to other elements of the stage. Imagine using finger gestures to trigger lighting changes, move props, or cue sound effects. The user wouldn’t just animate characters; they’d direct the entire scene, switching seamlessly between robot control and stage management.

By blending performance and control into one intuitive interface, the Puppeteer role opens up new possibilities for storytelling, where every gesture helps build a richer, more immersive narrative experience.

Wizard

In the Wizard role, gestures take on a magical quality. The user’s index finger becomes a wand, casting directional swishes and flicks to trigger dramatic visual and behavioral effects. A quick vertical flick turns the robots’ lights on or off, while sweeping horizontal gestures send them gliding and spinning across the stage in unison.

Here, gestures aren’t just commands; they’re spells. Each motion conjures a burst of synchronized light, sound, or movement, transforming the robot ensemble into a responsive, animated display. In our prototype, one spell-like sequence mimics a lightning strike: a series of rapid flicks triggers pulsing lights and sudden movement, creating an audiovisual effect that feels alive and reactive.
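Recognizing a "series of rapid flicks" could be done with a sliding time window over flick timestamps. The sketch below is one possible approach rather than the prototype's method; the threshold of three flicks within one second and the class name are assumptions.

```python
from collections import deque


class RapidFlickDetector:
    """Fires when `count` flicks arrive within `window_s` seconds."""

    def __init__(self, count: int = 3, window_s: float = 1.0) -> None:
        self.count = count
        self.window_s = window_s
        self._times = deque()  # timestamps of recent flicks, oldest first

    def on_flick(self, t: float) -> bool:
        """Record a flick at time t (seconds); return True if the spell fires."""
        self._times.append(t)
        # Drop flicks that have fallen out of the time window.
        while self._times and t - self._times[0] > self.window_s:
            self._times.popleft()
        if len(self._times) >= self.count:
            self._times.clear()  # reset so one burst triggers only once
            return True
        return False
```

Feeding it flicks at 0.0 s, 0.3 s, and 0.6 s fires on the third flick, whereas flicks spaced two seconds apart never fire.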

But what happens when we combine the Wizard’s sweeping magic with the Puppeteer’s precise control? Imagine using your fingers to guide individual robots into place, just like a puppeteer, but then swishing your hand to illuminate them, cue music, or set an entire scene in motion. The same gestures could tell stories both subtle and spectacular: constellations coming to life, fairies bestowing blessings, or even drones aligning for a rescue mission.

The magic lies in the simplicity. Swish, flick, poke, repeat, just as a fairy godmother might. Under the surface, though, these gestures form a powerful, expressive language for multi-robot storytelling.